As open-domain question answering, customer chatbots, and enterprise search platforms grow more sophisticated, it’s become increasingly clear that simply building Retrieval-Augmented Generation (RAG) systems isn’t enough. Combining retrieval with a generative model must be paired with rigorous evaluation and ongoing monitoring. Without them, you risk deploying a system that hallucinates information, returns incomplete results, or fails to adapt to real-world usage, leading to wasted resources, poor user experiences, and organizational headaches. Welcome to the journey of deploying RAG without regret.

What Is Retrieval-Augmented Generation (RAG)?

At its core, RAG is a technique that combines search with large language models (LLMs). Instead of answering purely from what the model memorized during training, a RAG system first retrieves relevant context from a knowledge base and then generates a response conditioned on that context. This can substantially improve the factual grounding of outputs, making LLMs more reliable and informative across broad knowledge domains.
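To make the retrieve-then-generate flow concrete, here is a minimal Python sketch. It uses a toy in-memory corpus and simple word-overlap scoring as a stand-in for a real retriever such as BM25 or a dense embedding index. `retrieve` and `build_prompt` are illustrative names, not part of any library, and the call to an actual LLM is deliberately left out.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(query: str, corpus: dict[str, str], k: int = 2) -> list[str]:
    """Return the IDs of the k documents sharing the most tokens with the query.

    Word overlap is a toy stand-in for BM25 or dense retrieval.
    """
    query_counts = Counter(tokenize(query))
    scores = {
        doc_id: sum((query_counts & Counter(tokenize(text))).values())
        for doc_id, text in corpus.items()
    }
    return sorted(scores, key=scores.get, reverse=True)[:k]


def build_prompt(query: str, corpus: dict[str, str], doc_ids: list[str]) -> str:
    """Assemble a grounded prompt: retrieved context first, then the question."""
    context = "\n".join(f"[{doc_id}] {corpus[doc_id]}" for doc_id in doc_ids)
    return (
        "Answer using only the context below and cite document IDs.\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\nAnswer:"
    )


corpus = {
    "doc1": "The warranty covers parts and labor for two years.",
    "doc2": "Returns are accepted within 30 days of purchase.",
    "doc3": "Our office is closed on public holidays.",
}
query = "How long does the warranty cover parts?"
top_ids = retrieve(query, corpus, k=1)
prompt = build_prompt(query, corpus, top_ids)
print(prompt)  # this prompt would then be sent to the LLM of your choice
```

Because the generator only sees what `retrieve` returns, every later evaluation question comes back to two things: whether the right documents reached the prompt, and whether the model used them faithfully.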

However, this retrieval-generation pipeline introduces new complexities that traditional language model evaluation techniques can’t always capture. So how do you ensure your RAG system is performant, trustworthy, and scalable?

The Importance of Evaluation in RAG

Unlike pure generative models, a RAG system depends on two components, the retriever and the generator, and a weakness in either one degrades the final answer. Evaluation must therefore cover multiple dimensions:

- Retrieval Precision: Are you retrieving the most relevant documents or passages?

- Relevance and Coverage: Is the retrieved information actually helpful, and does it cover the query topic sufficiently?

- Faithfulness: Does the generated output accurately reflect the retrieved content, without adding hallucinated facts?

- Fluency: Is the response well-structured and coherent?
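The first two dimensions can be scored automatically once you have relevance labels. Below is a minimal sketch that assumes a hand-labeled set of relevant document IDs per query (the IDs are made up). Precision@k measures how much of what you retrieved is relevant, and recall@k serves as a simple proxy for coverage. Faithfulness and fluency usually need model-based or human judgment and are not shown here.

```python
def precision_at_k(retrieved: list[str], relevant: set[str], k: int) -> float:
    """Fraction of the top-k slots filled by documents labeled relevant."""
    top = retrieved[:k]
    return sum(doc_id in relevant for doc_id in top) / k


def recall_at_k(retrieved: list[str], relevant: set[str], k: int) -> float:
    """Fraction of all relevant IDs that appear in the top-k results."""
    if not relevant:
        return 0.0
    top = set(retrieved[:k])
    return len(top & relevant) / len(relevant)


retrieved = ["d3", "d1", "d7", "d2"]  # ranked retriever output for one query
relevant = {"d1", "d2"}               # hypothetical human relevance labels
for k in (3, 4):
    print(k, precision_at_k(retrieved, relevant, k), recall_at_k(retrieved, relevant, k))
```

Dividing precision by k rather than by the number of results returned is a common convention. It penalizes a retriever that returns fewer than k documents.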

Each of these criteria requires its own measurement approach. Automatic metrics offer a way to evaluate at scale, but they often paint an incomplete picture and become a pitfall if you trust them blindly.

Common Pitfalls in RAG Evaluation

RAG pipelines sound straightforward, but the devil is in the details. Here are some common mistakes teams make during evaluation:

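- Over-reliance on automated metrics: Metrics like ROUGE or BLEU measure surface-level n-gram overlap with reference answers but don’t capture factual correctness. An answer that changes a single date or number can still score highly, while a correct paraphrase can score poorly.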

- Ignoring retrieval metrics: If the retrieved documents are irrelevant, your answer generation is operating on faulty ground. You cannot fix a broken base with better generation alone.

- No human-in-the-loop validation: Real users care more about usefulness than token similarity. Human evaluation is vital to validate RAG output in real-world settings.
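To see why overlap metrics mislead, consider a simplified unigram F1 score in the spirit of ROUGE-1. This is not the official ROUGE implementation, and the sentences are invented for illustration. An answer that states the wrong fact but reuses the reference wording scores far higher than a correct paraphrase.

```python
import re
from collections import Counter


def unigram_f1(candidate: str, reference: str) -> float:
    """Clipped unigram overlap F1 between a candidate and a reference answer."""
    cand = Counter(re.findall(r"[a-z0-9]+", candidate.lower()))
    ref = Counter(re.findall(r"[a-z0-9]+", reference.lower()))
    overlap = sum((cand & ref).values())  # per-token counts clipped by the minimum
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


reference = "The warranty lasts two years from the date of purchase"
wrong = "The warranty lasts five years from the date of purchase"  # wrong fact
paraphrase = "Coverage runs for 24 months after you buy it"       # correct fact

print(f"wrong answer:       {unigram_f1(wrong, reference):.2f}")
print(f"correct paraphrase: {unigram_f1(paraphrase, reference):.2f}")
```

Here the wrong answer scores 0.90 and the correct paraphrase scores 0.00. This is exactly the gap that human review and factuality checks need to cover.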

Don’t let these blind spots undermine your system. RAG demands a new playbook for evaluation, and the sections below show how to build one.

Multi-Level Evaluation Strategy

To evaluate your RAG system effectively, consider using a multi-level framework that incorporates both automatic and manual methods:

1. Evaluate the Retriever

- Top-k Accuracy: Check whether the ground-truth answer appears in at least one of the top-k retrieved passages.

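- Document recall and ranking: Retrieval methods such as BM25, Dense Passage Retrieval (DPR), and hybrid approaches should be tested consistently on benchmarks like Natural Questions or on curated enterprise datasets, tracking recall@k alongside ranking metrics such as mean reciprocal rank (MRR).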
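Here is a minimal sketch of the two retriever checks. It assumes you have gold answer strings for top-k accuracy and gold relevant document IDs for MRR, and all data below is illustrative. The answer match is a normalized, whole-word substring test. That is a simplification, and official benchmark scripts may normalize answers differently.

```python
import re


def normalize(text: str) -> str:
    """Lowercase and keep only alphanumeric tokens separated by single spaces."""
    return " ".join(re.findall(r"[a-z0-9]+", text.lower()))


def top_k_accuracy(results: list[list[str]], answers: list[str], k: int) -> float:
    """Share of questions whose answer appears in at least one top-k passage."""
    hits = 0
    for passages, answer in zip(results, answers):
        needle = f" {normalize(answer)} "  # pad so "two" does not match "network"
        if any(needle in f" {normalize(p)} " for p in passages[:k]):
            hits += 1
    return hits / len(answers)


def mean_reciprocal_rank(rankings: list[list[str]], relevant: list[set[str]]) -> float:
    """Average of 1/rank of the first relevant document (0 if none is retrieved)."""
    total = 0.0
    for ranked, gold in zip(rankings, relevant):
        for rank, doc_id in enumerate(ranked, start=1):
            if doc_id in gold:
                total += 1.0 / rank
                break
    return total / len(rankings)


results = [
    ["Paris is the capital of France.", "Lyon is a city in France."],
    ["The Nile flows north.", "Mount Everest is 8,849 m tall."],
]
answers = ["Paris", "8,849 m"]
print(top_k_accuracy(results, answers, k=1), top_k_accuracy(results, answers, k=2))
print(mean_reciprocal_rank([["d1", "d2"], ["d3", "d4"]], [{"d1"}, {"d4"}]))
```

Running the same functions over each retriever variant (BM25, DPR, hybrid) on a fixed question set gives directly comparable numbers.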

2. Evaluate the Generator

- Answer correctness: LLMs may paraphrase a correct answer or subtly distort it, so surface similarity to a reference is not enough. Verify factual accuracy explicitly.

- Answer completeness: Make sure the generated text includes all relevant data from the retrieved chunks.
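Both generator checks can be partly automated. The sketch below computes exact match against a reference answer, using normalization modeled on the SQuAD evaluation convention (lowercase, strip punctuation and English articles). It measures completeness against a hypothetical list of annotated key facts. String matching cannot recognize paraphrases, so treat these as cheap first-pass signals alongside model-based or human review.

```python
import re
import string


def normalize_answer(text: str) -> str:
    """Lowercase, drop punctuation and English articles, collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction: str, reference: str) -> bool:
    """True if prediction and reference are identical after normalization."""
    return normalize_answer(prediction) == normalize_answer(reference)


def completeness(answer: str, key_facts: list[str]) -> float:
    """Fraction of annotated key facts that appear verbatim in the answer."""
    if not key_facts:
        return 1.0
    padded = f" {normalize_answer(answer)} "
    found = sum(f" {normalize_answer(fact)} " in padded for fact in key_facts)
    return found / len(key_facts)


print(exact_match("The Eiffel Tower.", "eiffel tower"))
answer = "Refunds take 5 business days and require a receipt."
facts = ["5 business days", "receipt", "original packaging"]  # hypothetical annotations
print(completeness(answer, facts))
```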

3. Holistic Judgments

Here, human annotators judge the end-to-end output on axes such as:

- Helpfulness

- Coherence

- Informativeness

- Faithful citation of retrieved material
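Human judgments are only useful if annotators agree with each other. The sketch below makes two assumptions: each annotator gives a per-axis rating on a 1-5 scale, and, separately, a binary pass/fail label per response. All data is invented. It averages the ratings per axis and uses Cohen's kappa to check whether two annotators agree more often than chance would predict.

```python
from collections import Counter
from statistics import mean


def axis_means(ratings: dict[str, list[int]]) -> dict[str, float]:
    """Average rating per evaluation axis."""
    return {axis: mean(scores) for axis, scores in ratings.items()}


def cohens_kappa(labels_a: list, labels_b: list) -> float:
    """Chance-corrected agreement between two annotators on the same items."""
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label for every item
    return (observed - expected) / (1 - expected)


ratings = {"helpfulness": [4, 5, 3], "coherence": [5, 5, 4]}
print(axis_means(ratings))

annotator_a = [1, 1, 0, 1, 0, 1]  # 1 = response acceptable, 0 = not
annotator_b = [1, 0, 0, 1, 0, 1]
print(round(cohens_kappa(annotator_a, annotator_b), 3))
```

Low kappa usually signals that the rating guidelines are ambiguous and need tightening. Collecting more ratings won't fix that.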

Monitoring RAG in Real Time

Going live is not the end of the road; it’s the beginning of a critical new phase: continuous monitoring. Like any data-centric application, a RAG system’s performance can drift as user intent, language use, or knowledge base content changes.

What to Monitor

Here are some of the essential monitoring dimensions:

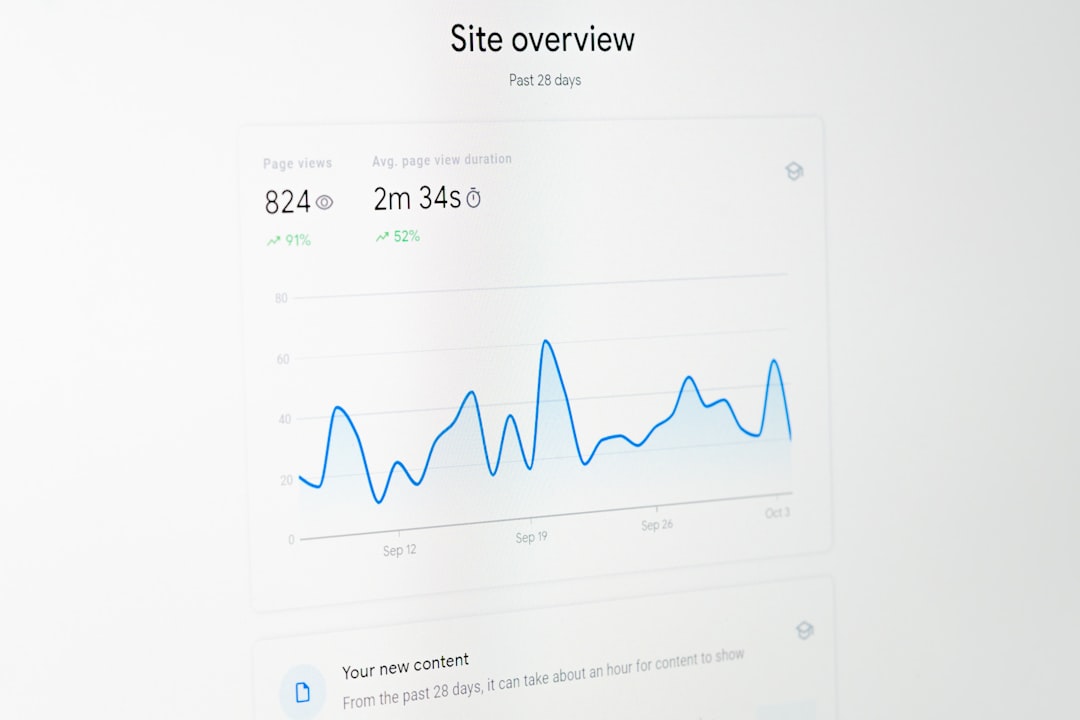

- Query Success Rate: Percentage of queries that produce a helpful answer, as judged by confidence thresholds or user feedback.

- Latency: End-to-end time for retrieval plus generation, which directly affects user experience.

- Retrieval Hit Rate: How often does the retriever return at least one high-quality, relevant document?

- Hallucination Incidents: Use prompt probes, automated consistency checks against the retrieved context, or user reports to detect when the LLM strays from the facts.
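These dimensions can be computed from interaction logs. The sketch assumes a hypothetical `Interaction` record. In it, `helpful` comes from user feedback or a confidence threshold, `retrieval_hit` from a relevance check on the top results, and `hallucination_flag` from whatever detection method you use. Latency is summarized with a nearest-rank percentile.

```python
import math
from dataclasses import dataclass


@dataclass
class Interaction:
    latency_ms: float          # retrieval + generation time
    retrieval_hit: bool        # at least one relevant document retrieved
    helpful: bool              # from feedback or a confidence threshold
    hallucination_flag: bool   # raised by a detector or a user report


def percentile(values: list[float], p: float) -> float:
    """Nearest-rank percentile, with p in (0, 100]."""
    ordered = sorted(values)
    rank = math.ceil(p / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


def summarize(log: list[Interaction]) -> dict[str, float]:
    """Aggregate one monitoring window of interactions into dashboard metrics."""
    n = len(log)
    return {
        "query_success_rate": sum(i.helpful for i in log) / n,
        "retrieval_hit_rate": sum(i.retrieval_hit for i in log) / n,
        "hallucination_rate": sum(i.hallucination_flag for i in log) / n,
        "p95_latency_ms": percentile([i.latency_ms for i in log], 95),
    }


log = [
    Interaction(120, True, True, False),
    Interaction(340, True, False, False),
    Interaction(200, False, False, True),
    Interaction(900, True, True, False),
]
print(summarize(log))
```

Computing this summary per day or per week and comparing windows is a simple way to spot the drift described above.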

Feedback Loops

Establish mechanisms for feedback collection. Whether through thumbs-up/down interfaces, detailed logging, or session analysis, user behavior reveals how well your system is performing in the real world.
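One simple way to close the loop is to track a rolling thumbs-up rate and alert when it drops. This is a minimal sketch. The window size, threshold, and minimum sample count are illustrative assumptions, not recommended values.

```python
from collections import deque


class FeedbackMonitor:
    """Rolling thumbs-up rate over the most recent votes, with a simple alert."""

    def __init__(self, window: int = 500, min_rate: float = 0.7, min_samples: int = 50):
        self.votes = deque(maxlen=window)  # oldest votes fall off automatically
        self.min_rate = min_rate
        self.min_samples = min_samples

    def record(self, thumbs_up: bool) -> bool:
        """Store one vote and return True if the rolling rate warrants an alert."""
        self.votes.append(thumbs_up)
        if len(self.votes) < self.min_samples:
            return False  # too little data to judge
        return sum(self.votes) / len(self.votes) < self.min_rate


monitor = FeedbackMonitor(window=10, min_rate=0.7, min_samples=5)
votes = [True] * 5 + [False] * 4
alerts = [monitor.record(v) for v in votes]
print(alerts)
```

The minimum sample count keeps a handful of early downvotes from triggering alerts. The bounded window makes the rate reflect recent behavior rather than all-time history.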

Red Teaming and Edge Cases

Proactively test your RAG system by simulating edge scenarios: garbled inputs, vague questions, or queries the index cannot support. Build resilience by failing gracefully and flagging these cases for human review.
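Here is a minimal sketch of graceful failure. It assumes the retriever returns similarity scores in [0, 1]. The heuristics for vague or garbled input and the 0.35 score cutoff are illustrative assumptions to tune on your own data. `review_queue` is a hypothetical stand-in for whatever human-review workflow you use.

```python
from dataclasses import dataclass

FALLBACK = "I couldn't find support for that in the knowledge base. It has been flagged for review."


@dataclass
class Verdict:
    answerable: bool
    reason: str


def check_query(query: str, top_scores: list[float], min_score: float = 0.35) -> Verdict:
    """Decide whether to generate an answer or fail gracefully."""
    if len(query.split()) < 2:
        return Verdict(False, "too short or vague")
    chars = query.replace(" ", "")
    if sum(ch.isalpha() for ch in chars) / len(chars) < 0.5:  # mostly symbols
        return Verdict(False, "looks garbled")
    if not top_scores or max(top_scores) < min_score:
        return Verdict(False, "no supporting documents")
    return Verdict(True, "ok")


review_queue: list[tuple[str, str]] = []


def route(query: str, top_scores: list[float]) -> str:
    """Return 'generate' or the fallback message, queuing failures for humans."""
    verdict = check_query(query, top_scores)
    if verdict.answerable:
        return "generate"
    review_queue.append((query, verdict.reason))
    return FALLBACK


results = [
    route("What is the refund window?", [0.82, 0.40]),
    route("#$%^ &*()!!", [0.90]),
    route("What is the refund window?", [0.12, 0.08]),
]
print(results)
print(review_queue)
```

A red-team suite can then assert that each crafted edge case lands in the queue with the expected reason rather than reaching the generator.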

Best Practices for Long-Term RAG Success

Here are strategic guidelines to avoid common pitfalls and ensure your RAG deployments age gracefully:

- Version your retrieval and indexing system: Data drift is real. Regularly reindex your corpus and track the performance difference between versions.

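- Deploy shadow testing: Mirror live queries to experimental retrievers or generators, log their outputs without showing them to users, and compare them against production. When you need to measure the impact on real users, especially when stakes are high, follow up with a controlled A/B test.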

- Incorporate embedding drift detection: Periodically re-encode a sample of documents and compare the results with the stored vectors to catch mismatches introduced when the embedding model changes.

- Establish a regression suite: Maintain a set of queries and expected responses to test before every deployment or model switch.
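A regression suite can start as a list of queries paired with facts each answer must contain. This sketch assumes `answer_fn` wraps your full RAG pipeline (the `candidate` function below is a hypothetical stub) and that substring checks suffice for the facts you care about. Richer suites often add model-based or human grading.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class RegressionCase:
    query: str
    must_contain: list[str]  # facts the answer is expected to mention


def run_suite(answer_fn: Callable[[str], str], cases: list[RegressionCase]) -> list[str]:
    """Run every case and return human-readable failure messages."""
    failures = []
    for case in cases:
        answer = answer_fn(case.query).lower()
        missing = [fact for fact in case.must_contain if fact.lower() not in answer]
        if missing:
            failures.append(f"{case.query!r} is missing {missing}")
    return failures


cases = [
    RegressionCase("What is the return window?", ["30 days"]),
    RegressionCase("How long is the warranty?", ["two years"]),
]


def candidate(query: str) -> str:
    """Stub standing in for a new retriever/generator version under test."""
    canned = {
        "What is the return window?": "Returns are accepted within 30 days.",
        "How long is the warranty?": "The warranty lasts one year.",
    }
    return canned.get(query, "")


failures = run_suite(candidate, cases)
if failures:
    print("Blocking deployment:")
    for failure in failures:
        print(" -", failure)
```

Running the same suite against the current production version and the candidate shows whether a reindex or model switch fixed or broke specific queries.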

Evaluating Trust and Quality at Scale

The ultimate goal is a scalable quality system. Manual evaluation alone won’t keep up with high query volumes, so automate where possible:

- Trustworthiness scoring: Estimate factual consistency by checking how well each claim in the response is supported by the retrieved documents, for example with a natural language inference (NLI) model.

- Confidence estimation: Use log-probabilities or classifier ensembles to assign a trust score to each response.

- Self-consistency tests: Query the model multiple times with paraphrased prompts to confirm answer stability.
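Here is a minimal sketch of the last two ideas. It assumes your model API exposes per-token log-probabilities for a response (the numbers below are made up) and that you have already collected answers to several paraphrased prompts. The geometric-mean token probability is one common way to collapse log-probabilities into a single score. The review thresholds are illustrative.

```python
import math
from collections import Counter


def sequence_confidence(token_logprobs: list[float]) -> float:
    """Geometric-mean token probability: exp of the mean log-probability."""
    return math.exp(sum(token_logprobs) / len(token_logprobs))


def self_consistency(answers: list[str]) -> tuple[str, float]:
    """Majority answer across paraphrased prompts and the share that agrees with it."""
    normalized = [" ".join(a.lower().split()) for a in answers]
    majority, count = Counter(normalized).most_common(1)[0]
    return majority, count / len(normalized)


confidence = sequence_confidence([-0.1, -0.2, -0.3])
majority, agreement = self_consistency(["30 days", "30 days", "30  Days", "14 days"])
needs_review = confidence < 0.5 or agreement < 0.6
print(f"confidence={confidence:.3f} majority={majority!r} agreement={agreement:.2f} review={needs_review}")
```

The normalization here only handles case and whitespace. Semantically equivalent answers phrased differently would need a stronger matcher before they count as agreeing.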

Enterprise deployments also benefit from redaction and compliance monitoring, especially when RAG systems handle sensitive data. Always include audit trails and access controls around document re-indexing and model updates.
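Audit trails are most useful when they are tamper-evident. The sketch below is a hash-chained log, assuming re-indexing and model-update actions are recorded through it (actor and action names are hypothetical). Each entry stores the SHA-256 hash of the previous one, so editing any past entry breaks verification. It does not implement access control, which still belongs in your infrastructure.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(body: dict) -> str:
    """Hash an entry deterministically (sorted keys) so verification is repeatable."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class AuditLog:
    def __init__(self) -> None:
        self.entries: list[dict] = []

    def record(self, actor: str, action: str, details: dict) -> dict:
        """Append an entry chained to the hash of the previous one."""
        body = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "actor": actor,
            "action": action,
            "details": details,
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        body["hash"] = entry_hash(body)  # computed before the "hash" key exists
        self.entries.append(body)
        return body

    def verify(self) -> bool:
        """Recompute every hash and link; any edited entry breaks the chain."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev or entry["hash"] != entry_hash(body):
                return False
            prev = entry["hash"]
        return True


audit = AuditLog()
audit.record("alice", "reindex", {"index_version": "v2"})
audit.record("bob", "model_update", {"generator": "model-b"})
print(audit.verify())  # True
audit.entries[0]["actor"] = "mallory"
print(audit.verify())  # False
```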

Conclusion: RAG Without Regret

When executed well, RAG systems turn language models into trustworthy, versatile assistants across healthcare, internal business operations, customer support, and more. Achieving this, however, requires a deep commitment to evaluation and monitoring at every step.

In short: design for trust, test for completeness, and monitor for change. With the right strategy, RAG can be more than a clever acronym; it can be your ticket to scalable AI without regret.